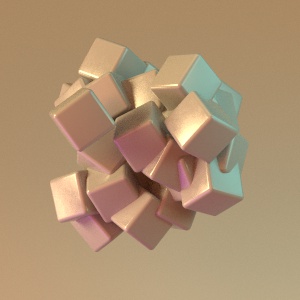

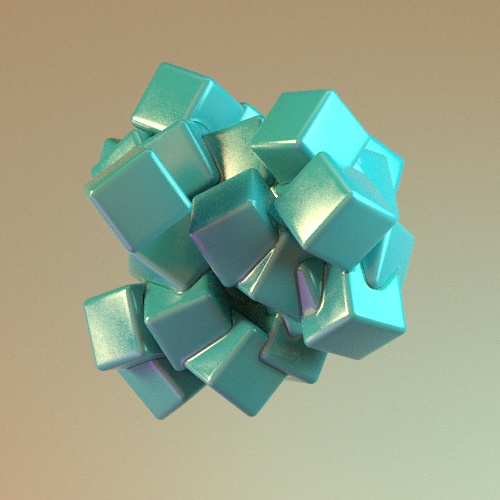

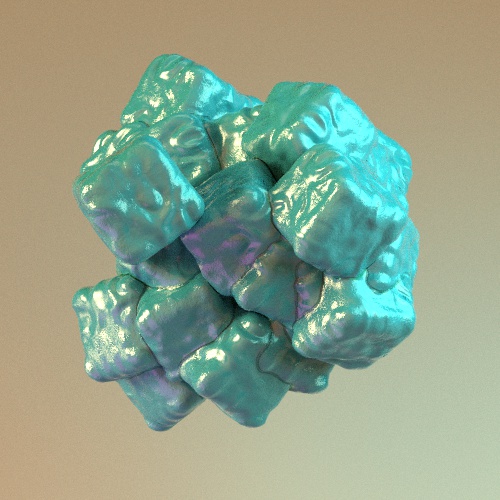

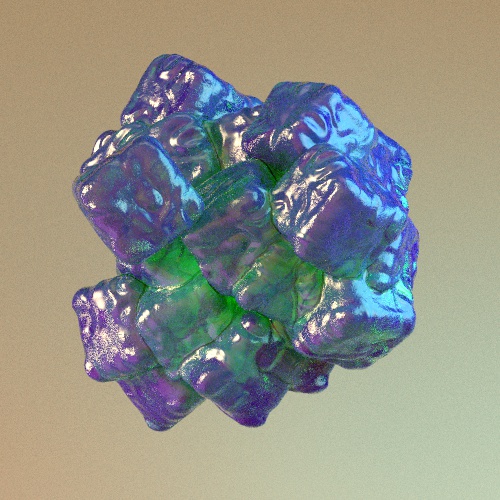

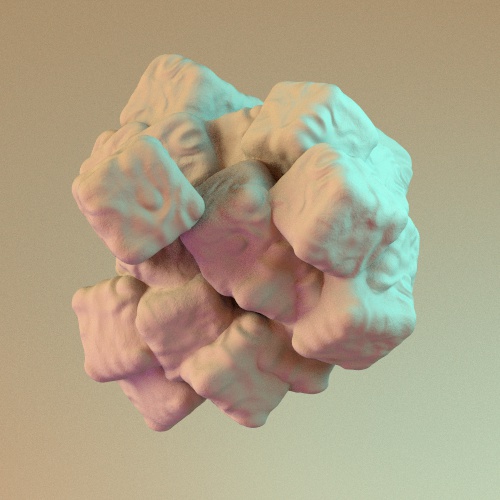

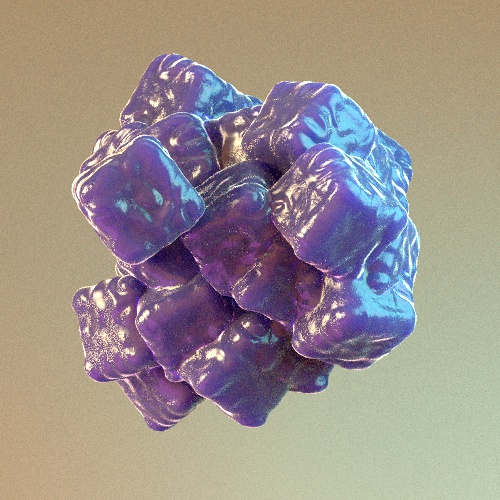

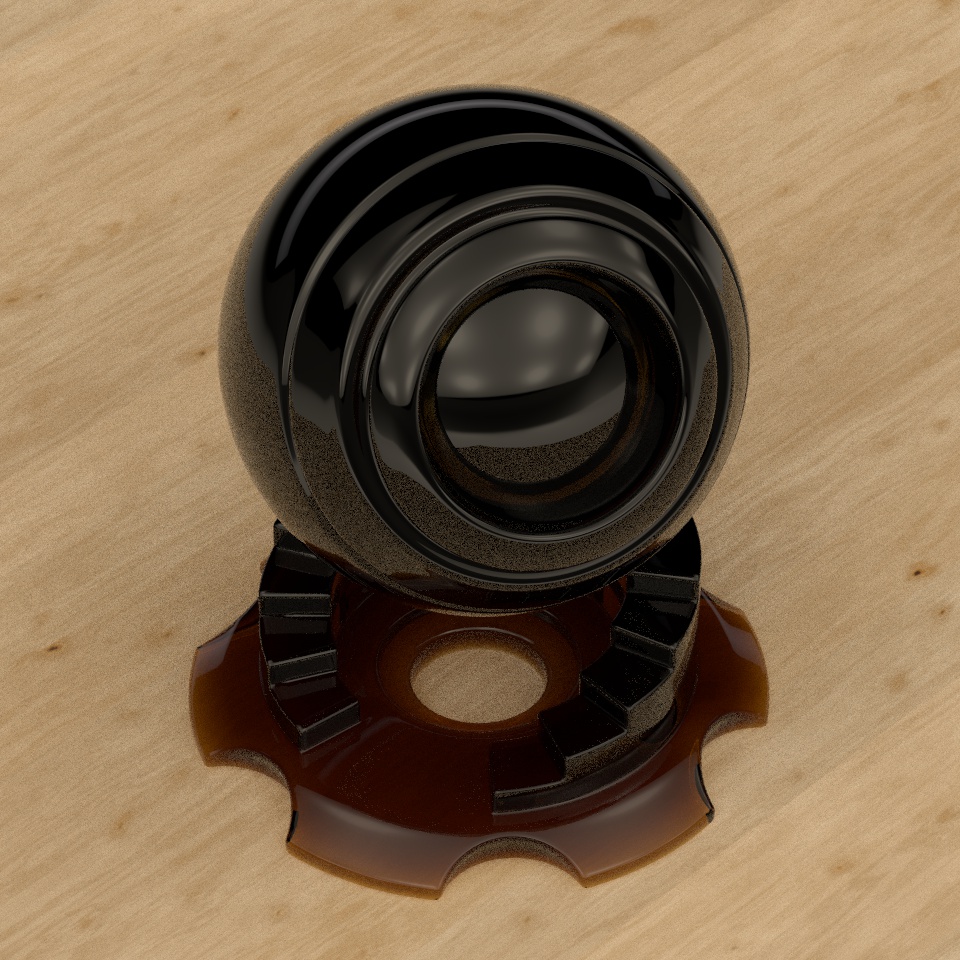

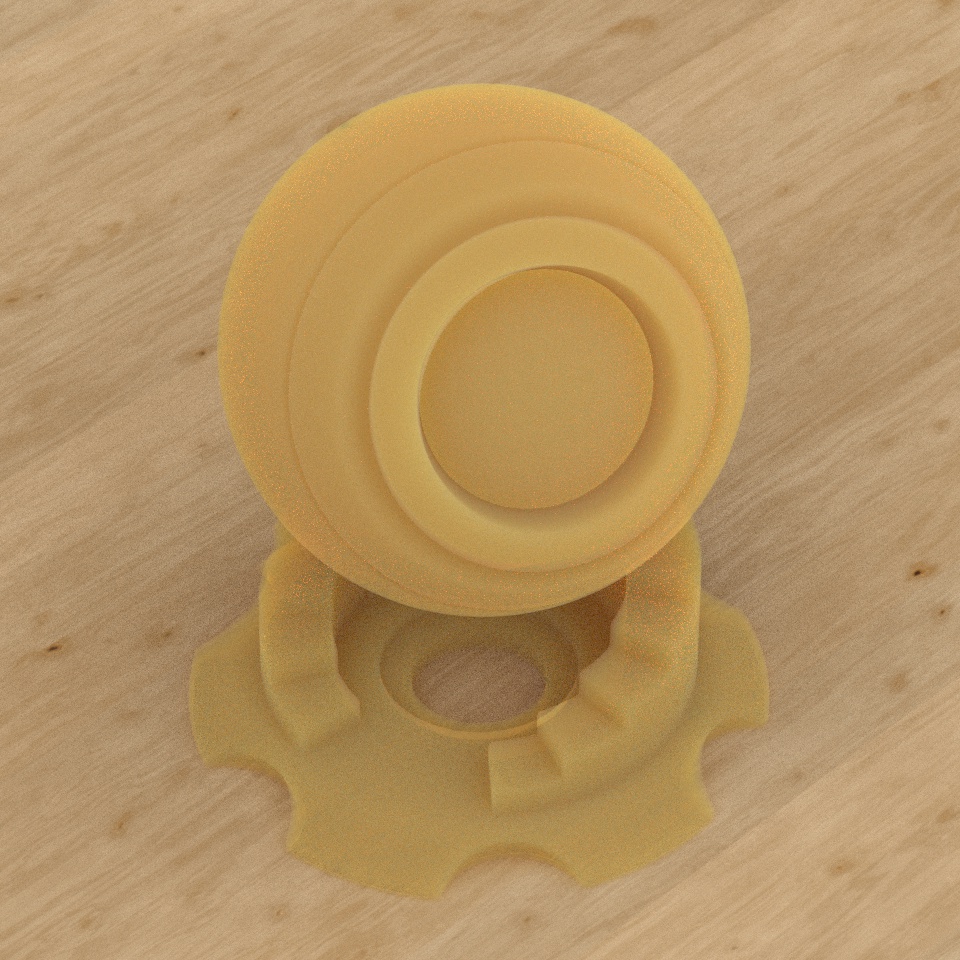

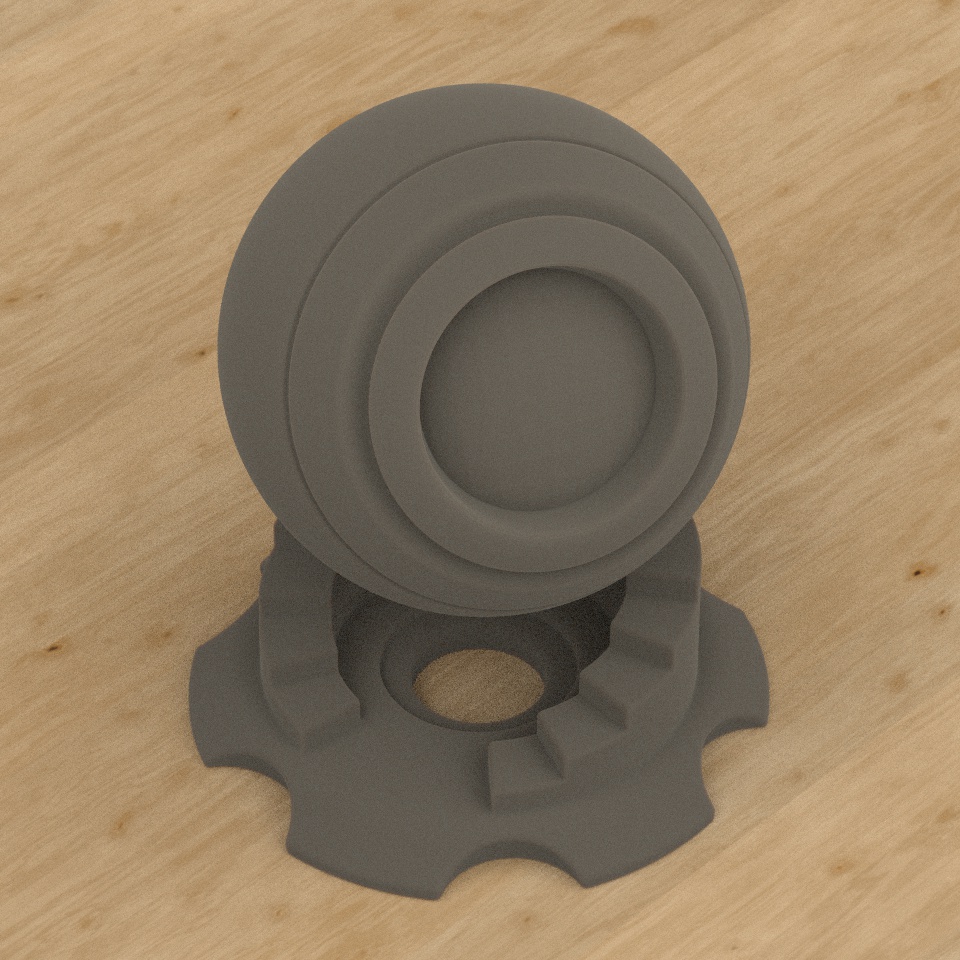

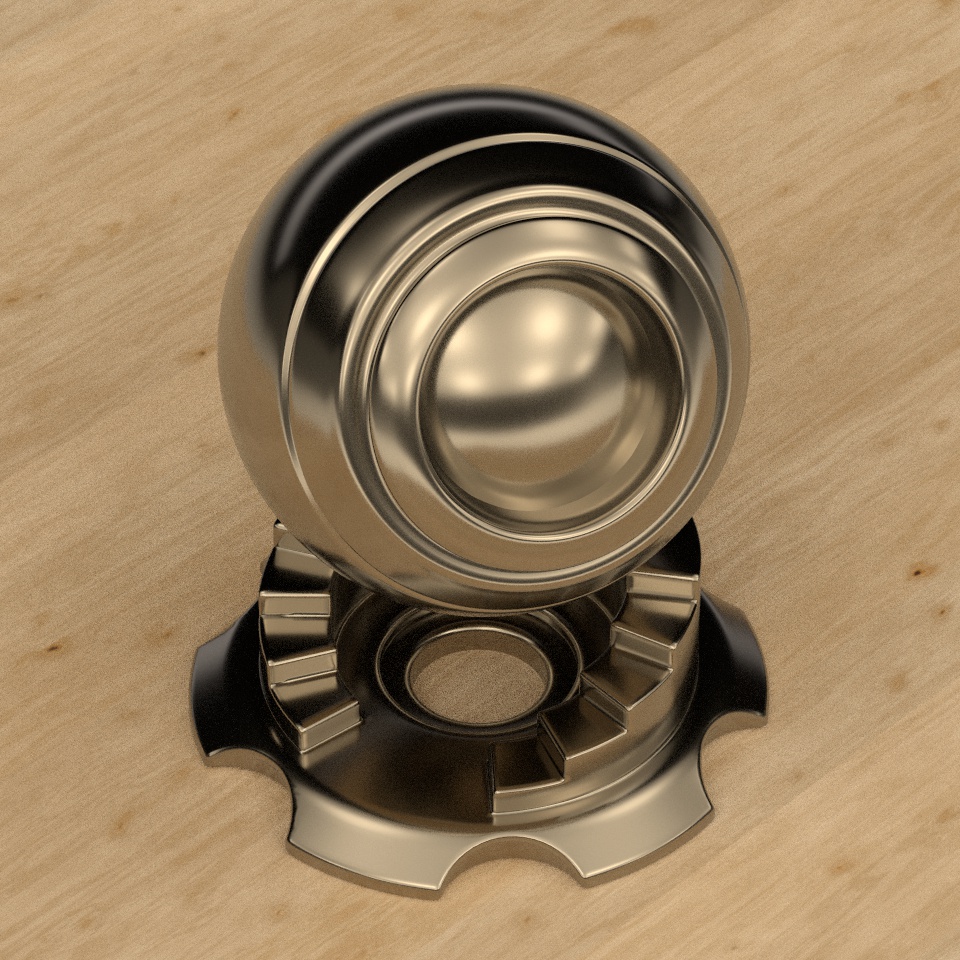

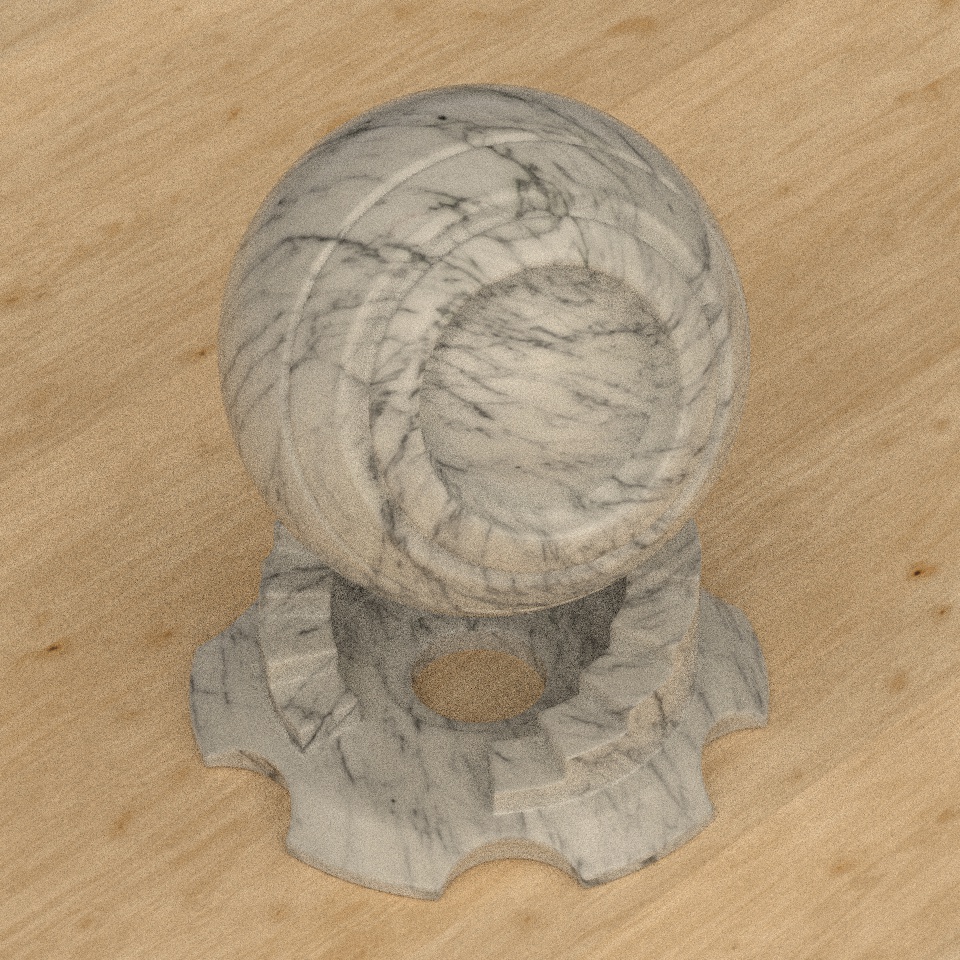

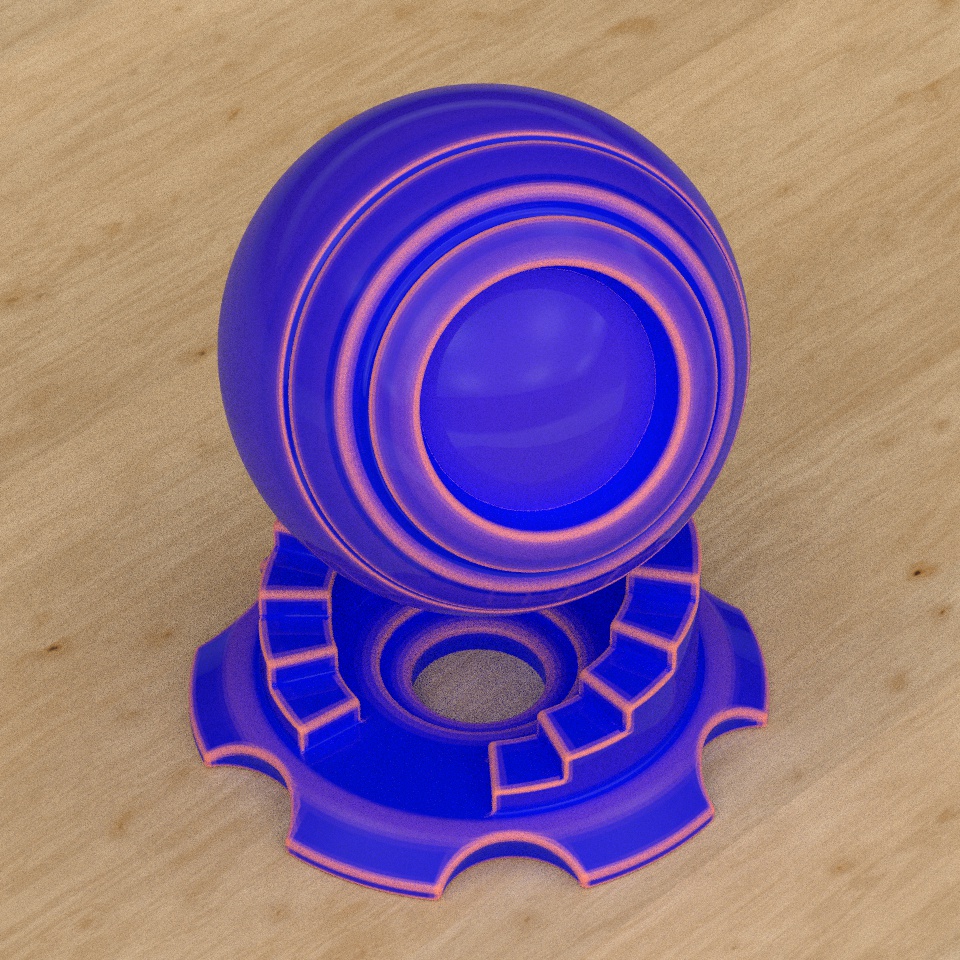

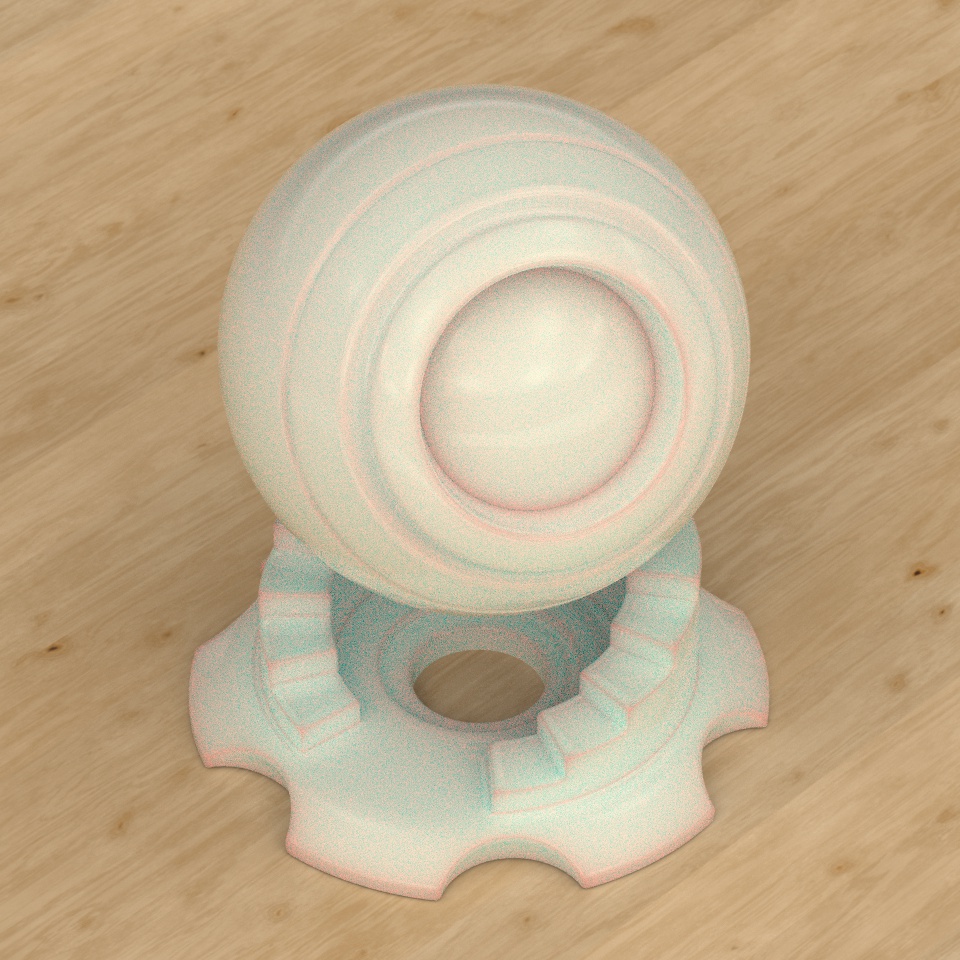

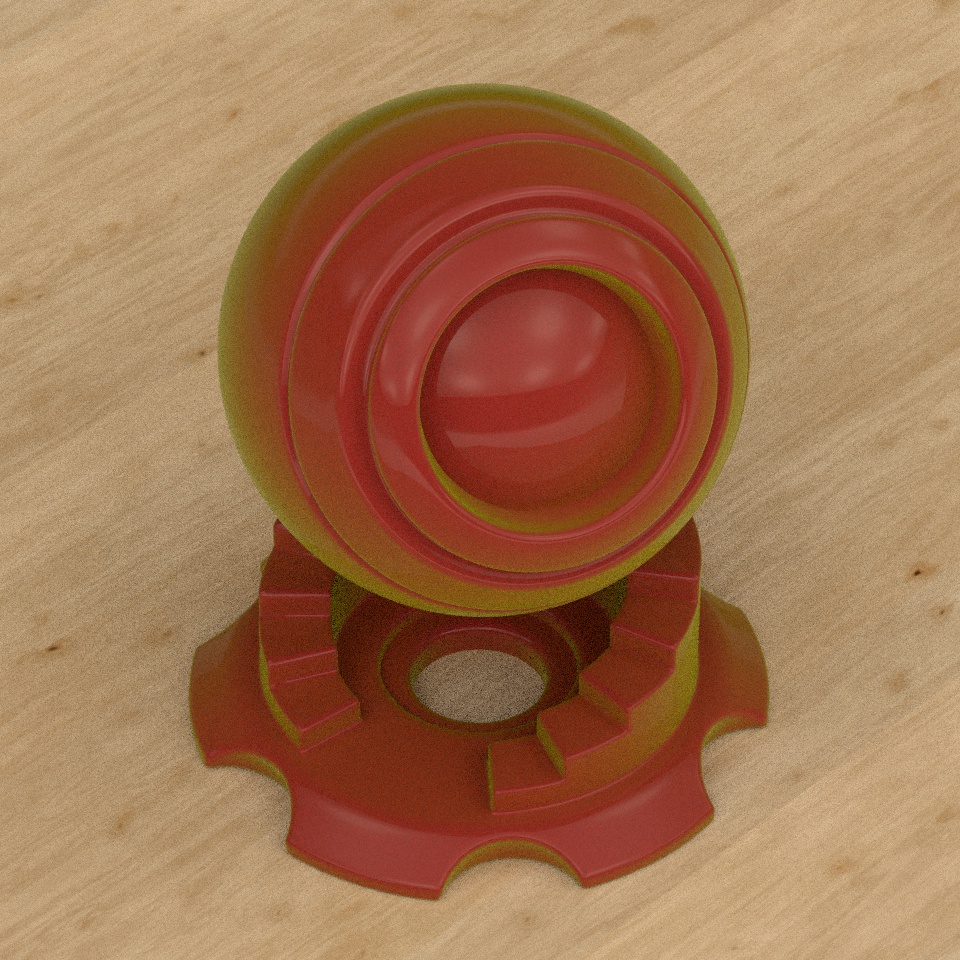

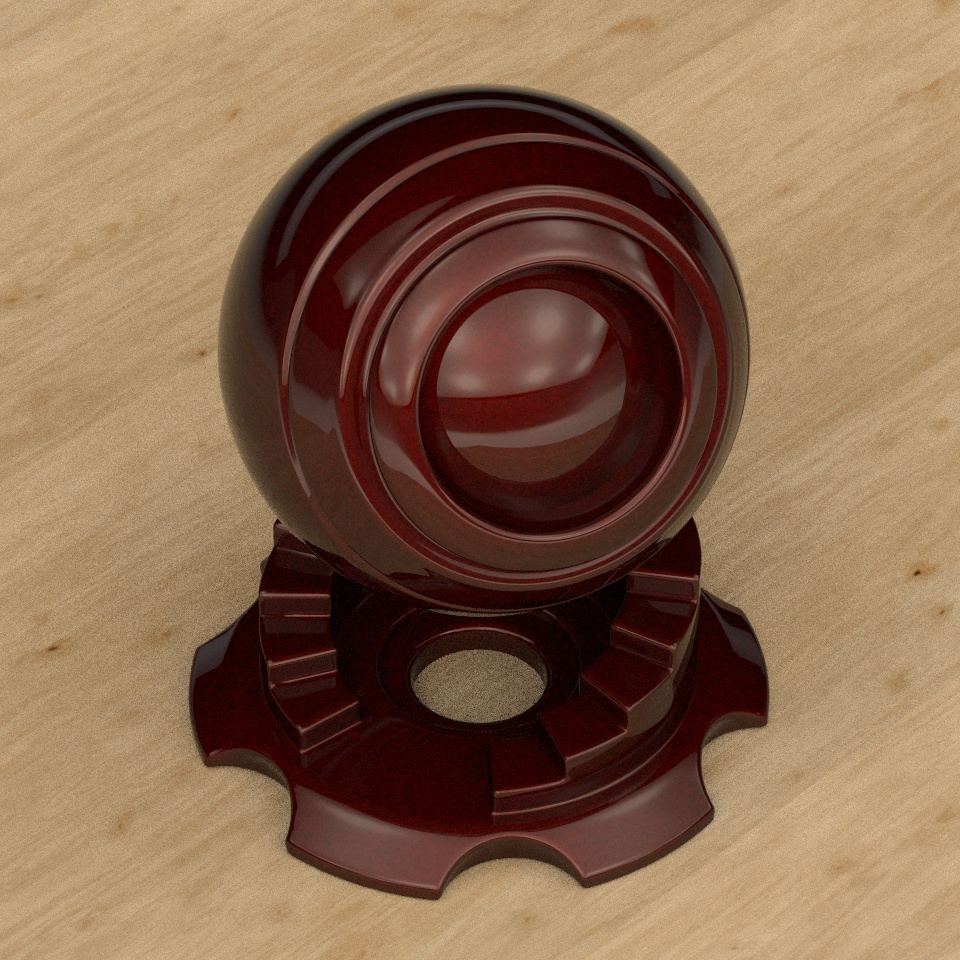

Redshift test with Displacement, Curvature, and Fresnel to achieve very different results with simple geo.


Experiment with Redshift Displacement. A cube with some noise.

Starting to play more with Redshift shaders in C4D, building up effects procedurally. All of these render in under 2 seconds. The subsurface effects are super effective. Good to know I have these in my toolkit and that they are very cheap to render. GI with Greyscalegorilla HDRI Link; pricey, but effective.

Just a bit of fun using a slicing method I worked out in Houdini and two Prusa printers, with me and Stella.

Summary (so far)

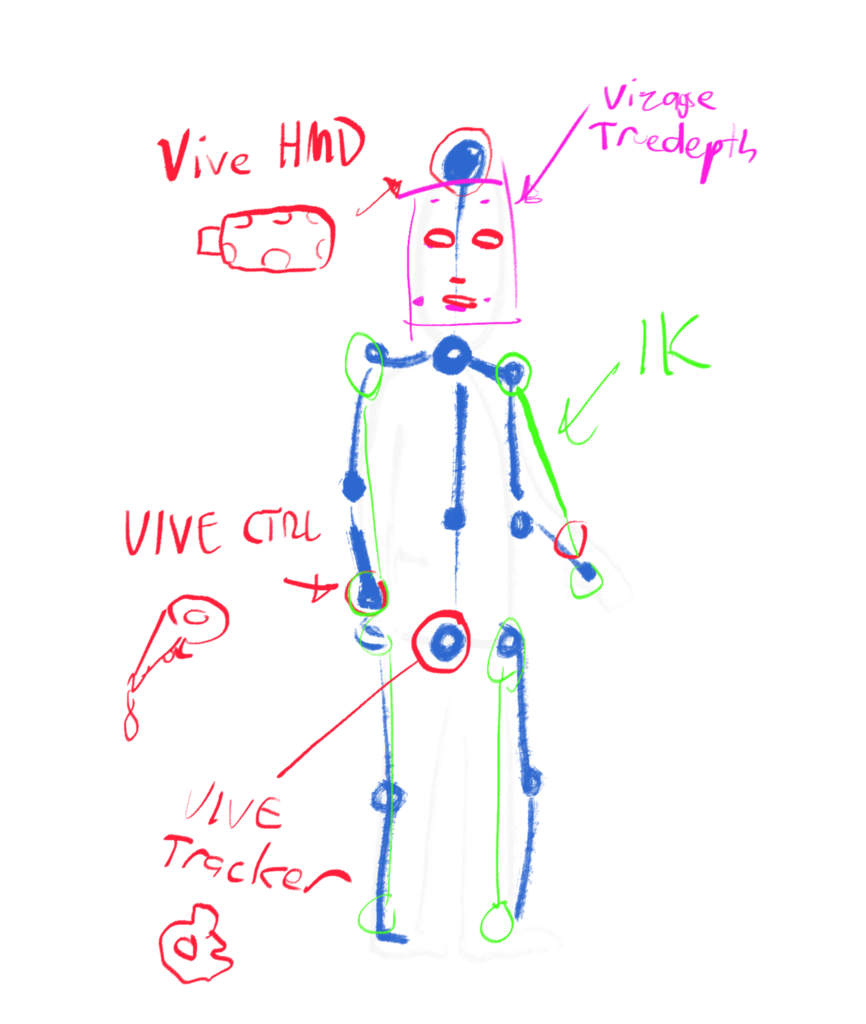

First, I up-skilled in interactive and game design with Unity and C#. To integrate with my motion capture goals I focused on vector maths and quaternions, using input to manipulate kinematic animation with linear and spherical interpolation. I explored the potential of motion capture in Unity using the HTC Vive, but decided to move to Unreal as the support for Apple ARKit and the Live Link capabilities were much more robust and better documented. I then became familiar with Unreal Engine and Blueprints.

I experimented with Visage studio for face capture, but ultimately settled on Apple ARKit. I bought six Vive trackers and designed my motion capture rig, including 3D printed adaptors and a head harness with an Apple TrueDepth sensor for facial capture. I became familiar with the Unreal Live Link Face app for iPhone, along with its remote UDP and OSC control and file transfer over TCP, so the face capture component of my rig can be controlled using network commands. Once I had my body and face motion capture rig working in Unreal, I became familiar with animation retargeting in Maya LT so that I can apply different animations to different rigs. I also familiarised myself with animation blending as well as pose morphing and blendshapes for the face.

With the technicalities settled, I moved on to the aesthetic components. I had used the Universal and Lightweight render pipelines during my Unity up-skill, but I felt something more visually powerful was needed, as well as a way to create procedural effects with the motion data. I upskilled in Houdini, creating procedural volumetric effects and particle simulations over motion capture data bound to a kinematic mesh. Finally, I learnt how to use Redshift to create physically based renders on my GPU, giving an end product that is visually appealing and economically viable. I also built my website, virtuallyanything.xyz, and populated my progress blog with development updates and livestreams.

The time spent on this project has been extremely useful for my artistic and professional development. Transitioning from animator to interactive designer and leveraging my performing background into motion capture has future-proofed my career. I have learned much more than I thought I would in this period; coding has been completely demystified and I have a working methodology to create motion capture performances using a working 8 point motion capture rig, augmented with procedural animation and rendered with cutting edge GPU rendering. I mentioned using Xbox Kinect for motion capture in the application, but my solution is much more robust. I am now in professional discussion with music labels to implement my technology in creating virtual videos, as well as with many performers in lockdown who need to transition their work into a virtual space. I feel communication through physicality is of importance to the stability of the social fabric, and Victoria has been through a unique challenge. Through the methodologies I have gained in this period, my new business can react positively and with great innovation to the changing social landscape. I will motion capture performances of artists who have had their audiences and revenue decimated through the closing of venues and restrictions on travel.
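As a rough illustration of what controlling a capture device "using network commands" can involve, here is a minimal Python sketch that packs an OSC 1.0 message and sends it as a single UDP datagram. It is not the code used in the project. The address `/example/record`, the host and the port are hypothetical placeholders, and the real addresses and arguments an app accepts need to come from that app's own documentation.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    padding = (-len(raw)) % 4
    return raw + b"\x00" * padding


def build_osc_message(address: str, *args) -> bytes:
    """Build one OSC 1.0 message with int32, float32 and string arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian int32
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(host: str, port: int, address: str, *args) -> None:
    """Send a single OSC message as one UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(build_osc_message(address, *args), (host, port))


# Hypothetical usage; address, host and port are placeholders:
# send_osc("192.168.0.20", 8000, "/example/record", "take", 1)
```

UDP is fire-and-forget, so a sketch like this gives no confirmation that the command arrived; anything that matters would need a reply message or a check on the device.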

3D printed mocap rig. Works well. Velcro and chest harness from eBay. Foot buckles from Thingiverse. The rest designed by me. The models aren’t the cleanest, but they do the job.

Retargeting animation between differing skeletal setups in Maya LT is fairly straightforward, plus the full-body IK controllers in the Maya character rigging tool are going to be quite versatile.

Step One- Unreal

Motion Capture using Mannequin skeleton with IK

Face Capture using ARKit

Retarget to custom skeleton

Refine animation and IK using blending etc

I’ve chosen Unreal because its animation blending capabilities are robust and the VRMoCap plugin is an acceptable balance of cost vs quality. The downside is the use of the UE4 Mannequin. If I can find a solution to multiple Vive trackers in headless mode in Unity, I’ll consider it.

Step Two- Maya

Finesse key-frames

Avatar Modelling

Additional Re-targeting

Maya can easily retarget skeletal setups and is just a safe all-rounder to finesse the pieces. I can use the significantly cheaper Maya LT and bypass MotionBuilder because Unreal has the animation clip blending functionality I am looking for.

Step Three- Houdini

Add Procedural Effects to baked mesh

Houdini will be used for aesthetic flavor. There is so much control in this program. I will play with Vellum and VDB as well as clone to points.

Step Four- Unity

Import to Unity, light and stage

Additional Re-targeting

Export to HTML5

I’m really starting to see the benefits of Unity now, namely the build times. I can really cut out the unneeded bits, and also easily get it into HTML5.

Step Five- Dreamweaver

HTML5 presentation UX

I think I need a separate post explaining why I’m going for web, but I think it’s the future and will serve the experience well. The capabilities of HTML5 and in-browser game experiences are severely underexposed to the public and really underutilised. There’s so much to be excited about with HTML5, and I mean, if I can get a motion captured performance into a 3D environment that works in just a web browser out of the box, what more do you want!

Learning Houdini

Smoke Monster from Lost

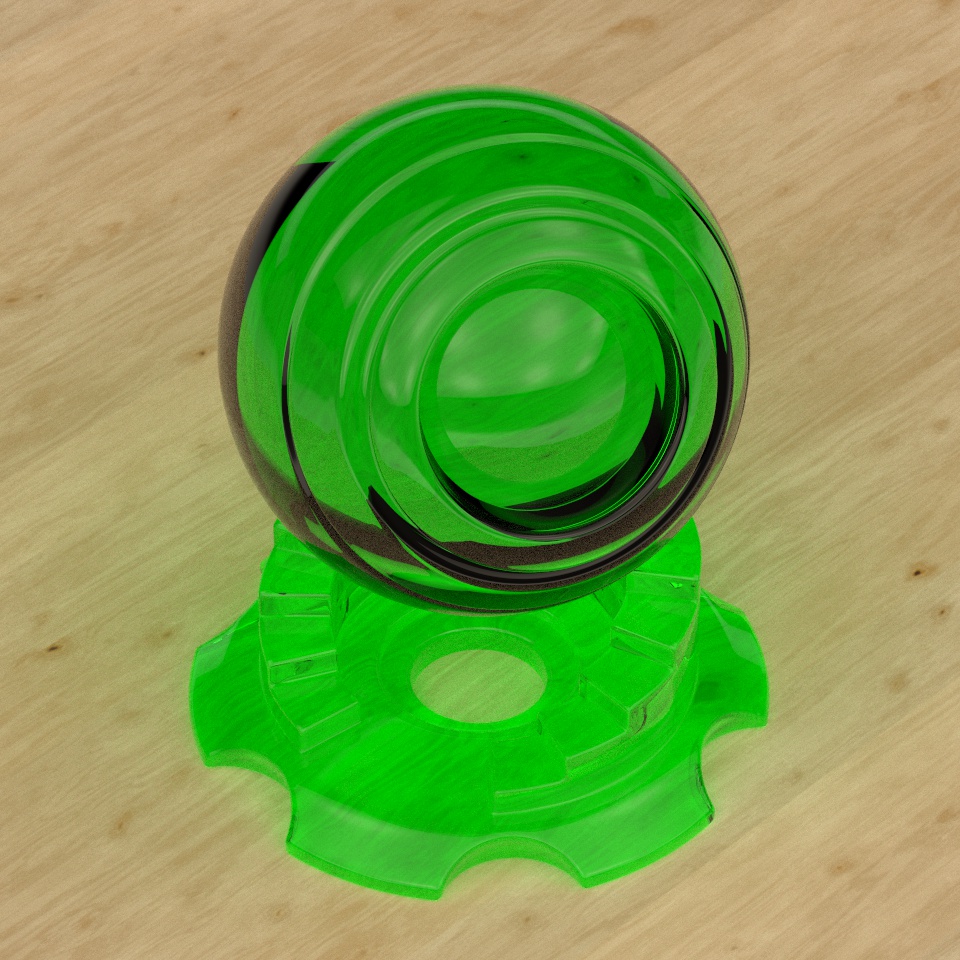

Rendered in Redshift. Very speedy compared to the native CPU Mantra renderer.

Procedural: the ball will always touch the highest point of the bounding box in y.
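The rule behind that setup can be sketched outside Houdini in a few lines of Python. This is only an illustration of the idea, not the node network used for the render: it assumes the ball should rest on top of the geometry and sit at the centre of the bounding box in x and z, and the function name is made up for the example.

```python
from typing import Iterable, Tuple

Point = Tuple[float, float, float]


def ball_centre_on_top(points: Iterable[Point], radius: float) -> Point:
    """Return a ball centre so the ball's lowest point touches the bounding box's max y."""
    pts = list(points)
    if not pts:
        raise ValueError("need at least one point")
    xs, ys, zs = zip(*pts)
    # Assumption: centre the ball over the middle of the bounding box in x and z.
    centre_x = (min(xs) + max(xs)) / 2.0
    centre_z = (min(zs) + max(zs)) / 2.0
    # Lifting the centre by one radius makes the ball just touch the top face.
    return (centre_x, max(ys) + radius, centre_z)
```

Because the centre is recomputed from the points every time, the ball follows the geometry whenever the noise or animation changes its height.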

First tests in Houdini. Got to start somewhere.

Also, here’s how I’m gonna capture the face data. Three 3D printed components, some wing nuts, and a bike helmet. I am sure it will work. The actor won’t be able to see where they are going, but that’s the price I’m willing to pay. It’s all about the face, and it’s going to be captured as a post process anyway because their face will be covered by the Vive headset. At a later stage I can attach another Vive tracker to the helmet.

Today has been all about recon still. I was going to just go ahead and buy a Rokoko Smartsuit because it seemed versatile, but then I realised it was not world-space calculated, but rather an accelerometer type setup. The customer service seems second to none, but the results are…questionable. The face tracking plugin and the mocap software provided by Rokoko do seem like a workable solution though, especially now I’m going with Vive. Yeah, I’m back to Vive. It’s a tried and tested method of world space performance capture, and I already have most of the bits so I’ll proceed with that. It seems the standard number of nodes for a body capture is six, so I’ve bought three Vive trackers to get up to that level: Face, HandL, HandR, Hip, FootL, FootR. I’ll see how the IK is for the elbows and legs, but more would be better, perhaps another two on each shoulder, and one on the head as a virtual camera. I’ll explore this with the talent sitting down and take them off the feet.

For software, I’ll try Rokoko. There seems to be a consensus that IKinema is a cheap and very robust piece of software, but it has been bought by Apple and subsequently the site is pretty much down, and any reference to it has been taken off the Unreal Marketplace. Very suss. VR MoCap for Unity is cheap enough for me not to be disappointed if it’s not good. It’s funny, this tech was cutting edge five years ago and it hasn’t gotten much better, just harder to access. I wonder if that’s because it was overhyped, investment wasn’t there, so the middleware has caved and been bought up by the whales, leaving AAA solutions…and crud.

As I said in an earlier post, I think I have been too hard on myself to make the work resulting from this artistically significant, like I actually have something important to say. I’m not ready to take a position I have to justify. Anyhow, I’m hoping for this project to teach me and the audience at a similar rate, just offset. I do an experiment, I release the experiment. I need to give myself permission to make these things look nice. I’ve been quite against it up until this point, but it started to become inevitable when I lost three days deciding on render engines and literally couldn’t proceed without the whole project breaking before locking something in.

So this is where I’m at. I can confidently use Unreal and Blender as tools to create interactive theatre. This has taken a maintained lightspeed upskill that I’m super proud of. I’m confident I have the storytelling, directing and theatre experience to curate, light, decorate and present a piece. Should I just start doing this? No. I feel I need to get back to aesthetics. Perhaps I’m procrastinating, but I want to know that I can do some interesting tricks with motion capture footage. It’s all the rage: procedural physics and the like. It stands out, it generates confidence for everyone. There’s only so much ugly ass content I can post on an ugly ass site before the project may as well be a thing I do on Sunday afternoons. In other words, expect things to start looking nicer. I’ve begun to learn Houdini, which is a highly procedural program that I can apply my newfound coding confidence to, in an environment that feels a bit like C4D.

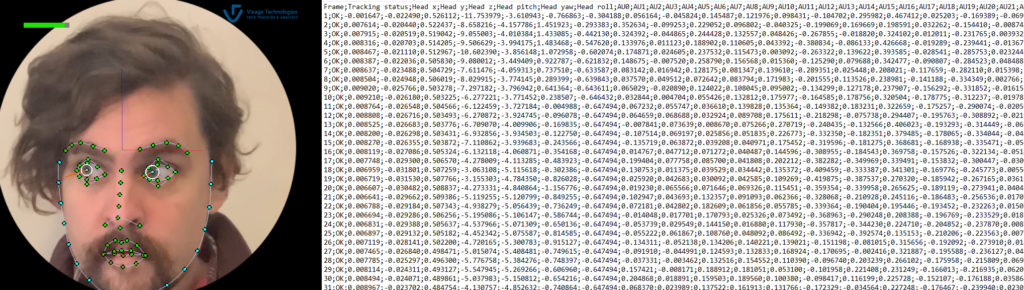

This is a breakthrough. I feel like I can sleep easy knowing this data can get to me. I can generate a .txt with floats of various tracking points and the rotation of the eyes and head. ‘Even if’ I just extract and construct a rotation Vector3 from the first three columns I’m in a good place. Unfortunately I’m not clever enough to interpret this string into an array yet. I’d prefer a third party plugin because I feel like it’s wasted time getting my hands too dirty in this, like I tried with writing shaders yesterday, when there’s a perfectly good solution out there. I think it would be a foreach loop. I’ll come back to this just because I’m interested. So, Visage is a solution. F-clone seems to integrate directly with MotionBuilder and Unity, so I’d like to try that now. Then I’ll step up to Rokoko Studio. It’s pressing because end of FY is next Friday, so if I’m going to spend dosh, I should make a decision soon. Also, I need to have a look at Autodesk MotionBuilder. I purchased a subscription to Maya LT just so I don’t have to deal with Blender.
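The "string into an array" step can be sketched in a few lines of Python. The file layout here is an assumption, since the post does not show it: one frame per line, values separated by spaces or commas, with the first three columns holding the head rotation. The function names are made up for the example.

```python
from typing import List, Sequence, Tuple


def parse_tracking_text(text: str) -> List[List[float]]:
    """Turn the raw text into one list of floats per non-empty line."""
    rows = []
    for line in text.splitlines():
        # Assumption: values are separated by whitespace and/or commas.
        fields = line.replace(",", " ").split()
        if not fields:
            continue  # skip blank lines
        rows.append([float(field) for field in fields])
    return rows


def rotation_from_row(row: Sequence[float]) -> Tuple[float, float, float]:
    """Take the first three columns of a row as an (x, y, z) rotation."""
    if len(row) < 3:
        raise ValueError("row has fewer than three columns")
    return (row[0], row[1], row[2])
```

The same shape carries over to C# in Unity as a loop over the lines with `float.Parse` on each field, feeding the first three values into a `Vector3`.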

This week has been a hard one. I feel like every step requires ten others. Facial tracking is everywhere, but super expensive. The pieces are dangling in front of my nose.

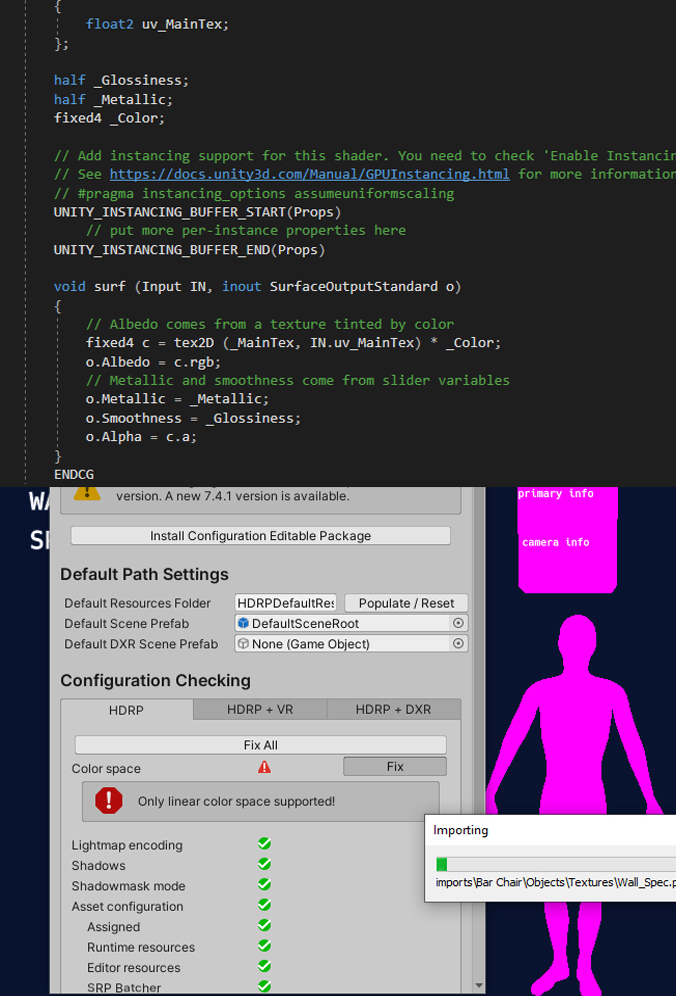

I’ve had to update to the Universal Render Pipeline, and it’s broken everything. This is a major con with Unity. All these third party packages are starting to fight each other. I’ve enabled URP, but then video doesn’t work. I can go without because the native post processing is pretty nice, but then I have to write shaders from scratch to get the flexibility I need, particularly fresnel. I love it so much, but practically, if I’m going to put a head in a fishbowl, I need the fresnel, and possibly refraction, and writing that by hand is just not conducive to the creative flexibility I want. A node based solution for shaders would be great, as long as it doesn’t slow things down. I’m thinking I need to just take a step back and think about how this is going to be put together. Because it’s VR, I think I want it on the lighter end, but also to have great control of lighting. I spent a good part of today trying to write shaders, and now I’m comparing HD RP and LW RP, then I’ll choose, then I’ll get this houseboat floating downstream again. I was going to say freight train, or truck, but it’s more like a frontier wagon with things bolted on.
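For reference, the fresnel term a glass shader usually needs is small. A common choice is Schlick’s approximation, sketched below in Python purely to show the maths; a real shader would do this per pixel in HLSL or a node graph, and this is not taken from the project’s shaders. The function names and the glass index of 1.5 are assumptions for the example.

```python
def f0_from_ior(ior_outside: float, ior_inside: float) -> float:
    """Reflectance at normal incidence from the two refractive indices."""
    return ((ior_outside - ior_inside) / (ior_outside + ior_inside)) ** 2


def schlick_fresnel(cos_theta: float, f0: float) -> float:
    """Schlick's approximation: reflectance rises towards 1 at grazing angles.

    cos_theta is the dot product of the unit surface normal and unit view vector.
    """
    cos_theta = min(max(cos_theta, 0.0), 1.0)  # clamp to the valid range
    return f0 + (1.0 - f0) * (1.0 - cos_theta) ** 5


# Example: air to glass, assuming an index of refraction of 1.5 for the bowl.
glass_f0 = f0_from_ior(1.0, 1.5)
edge_reflectance = schlick_fresnel(0.1, glass_f0)    # near the silhouette
centre_reflectance = schlick_fresnel(1.0, glass_f0)  # looking straight on
```

The result is typically used as the blend weight between the reflected environment and whatever is seen through the glass, which is what makes the rim of a dome read as glass.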

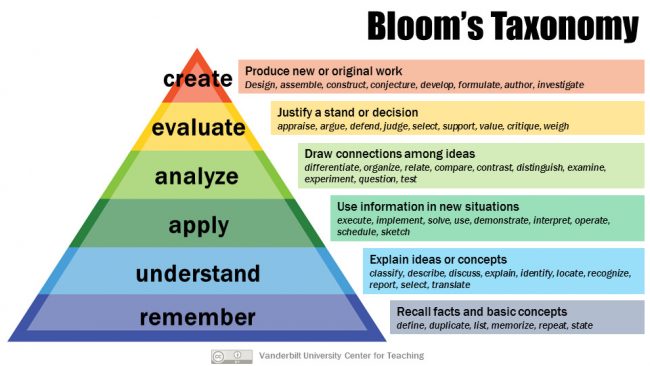

Finally getting a bit of humanity into this, things are getting weird. Also, to cover my ass I’ve gone with the easiest option first to make sure I have a fallback plan in case the face tracking avenue isn’t viable. I’m starting to relax into this to be honest. I feel like straight up I was treating it with the auspiciousness of a PhD, and it’s not. It’s just a big nerd upskilling and hopefully he’ll learn a thing or two… I really need to go easy on myself. An artist’s job is to ask questions, not solve problems. There’s now a meta version of myself in the game environment. Also, Bloom’s Taxonomy. I feel like I’m on the fourth tier now. I’m feeling a little overwhelmed but I can really pat myself on the back for that.

Tomorrow I’ll move on to getting a fresnel and refractive shader on that dome of mine. I’ll then have a crack at getting Visage working, and also this cheapskate Blender face dots concept. After that, ARKit, and then I’m going to have to lay down some money and probably get an actual mocap suit. I might be able to put it off a little longer if I buy a Kinect or two. In the long run, knowing how to use these things is going to be very beneficial.

Next step is face tracking. It’s important to get a move on actually recording a subject. I can be the motion capture body surrogate myself at home as a post process, so that can come later. A solution is needed where the subject can just send me a video captured on a mobile phone. If I have to, I’ll use TrueDepth on an iPhone X, but then it becomes a socioeconomic filter, and I really don’t want to be just interviewing rich people. I would like to be phone agnostic. Turns out Blender and drawing dots on the face with a sharpie works a treat, but I think that may be a little degrading for the subject.

| Package | Price | Pro | Con |
|---|---|---|---|
| Rokoko Studio | $ $ 100+/- p/m | Excellent learning materials. Body and Face. Vive friendly. + + + + | Just TrueDepth for FT – – |
| DynamiXYZ | $489+ | Great learning resources. AAA titles. + + + | Machine learning (tracking profiles) requires time spent with performer – – – |
| F-clone | $60 | From video. Simple and versatile: Maya, MotionBuilder, Unity. | Might be the one? |
| Visage | $ $ | FT from Video. (what I want) Seems accurate. Possibly lightweight. + + + + | Buggy at first glance. (Can’t get it to open.) – – – |
| Blender | FREE | Native face mo cap using a sharpie + + + | Humiliating for the subject. Consider stickers? – – |
| Optitrack | $ $ $ $ | Native camera system. Amazing products. + + + | Way overblown for this. – – – – – |
| Banuba | $ $ $ | Native integration with Unity. Slick Site. + + | Pretty basic functionality for the price. ‘Anchor a 3d object to a face?’ “Wear an afro!” Eat my hat, Banuba. – – – – – – – – – – – – |

Milestones

– established Visual style MVP, lighting, export, audio.

– comprehend C# OOP to an acceptable level

– greater comprehension of vector math:

– in particular quaternions and Eulers (mo cap -> augmented skeletal.)(big one)

– working with animations in Unity.

– modify animations dynamically through IK, Skeletal Masks, Strafe sets, triggers.
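To make the quaternion milestone concrete, here is a minimal Python sketch of spherical interpolation (slerp) between two rotations, the operation used to blend a captured joint rotation towards a target. It is an illustration under stated assumptions, not the project’s code: quaternions are stored as `(w, x, y, z)` tuples, and the helper names are made up. In Unity the built-in equivalent is `Quaternion.Slerp`.

```python
import math
from typing import Tuple

Quat = Tuple[float, float, float, float]  # (w, x, y, z)


def normalise(q: Quat) -> Quat:
    """Scale a quaternion to unit length."""
    length = math.sqrt(sum(c * c for c in q))
    if length == 0.0:
        raise ValueError("cannot normalise a zero quaternion")
    return tuple(c / length for c in q)


def from_axis_angle(axis: Tuple[float, float, float], angle_rad: float) -> Quat:
    """Build a unit quaternion for a rotation of angle_rad around axis."""
    ax, ay, az = axis
    length = math.sqrt(ax * ax + ay * ay + az * az)
    s = math.sin(angle_rad / 2.0) / length
    return (math.cos(angle_rad / 2.0), ax * s, ay * s, az * s)


def slerp(q0: Quat, q1: Quat, t: float) -> Quat:
    """Interpolate from q0 (t=0) to q1 (t=1) at constant angular speed."""
    q0, q1 = normalise(q0), normalise(q1)
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:
        # q and -q are the same rotation; flip one to take the shorter arc.
        q1 = tuple(-c for c in q1)
        dot = -dot
    if dot > 0.9995:
        # Nearly identical rotations: plain lerp then renormalise avoids dividing by ~0.
        return normalise(tuple(a + t * (b - a) for a, b in zip(q0, q1)))
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    w0 = math.sin((1.0 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return tuple(w0 * a + w1 * b for a, b in zip(q0, q1))
```

Halfway between no rotation and a 90 degree turn around y, `slerp` returns the 45 degree turn around y. Interpolating the Euler angles directly does not give that kind of guarantee once more than one axis is involved, which is why the quaternion form matters for mocap blending.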

Work to do before the end of the month

– Working with VR using Vive

– Face tracking using ARKit

– Recording motion capture

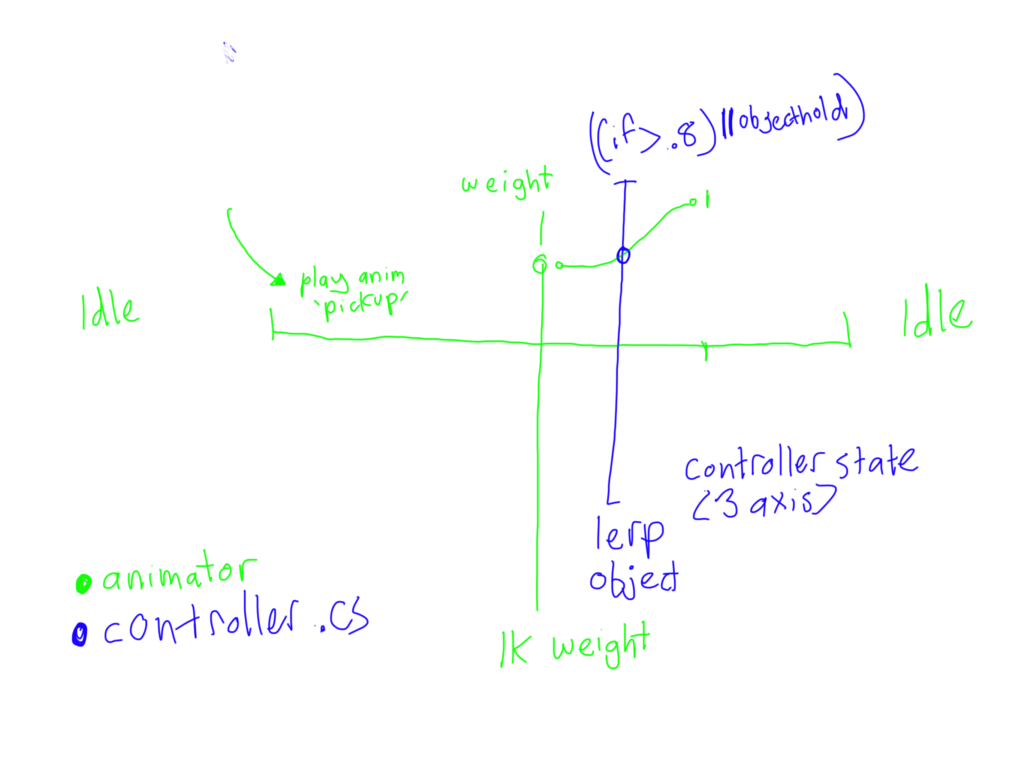

Being a visual thinker, I find flow charts help. With today’s experiment there were a lot of variables that I needed to visualise over time. I’ve been considering the merits of scripting over Unreal and Blueprints, and I really can’t see any apart from the self-satisfaction of looking at text jargon and seeing shit like Neo.

Refactoring

A note on refactoring: I feel confident now I can code, that I can make a game. But I am not a programmer, in the same way that I usually do my own tax but am not a tax accountant. I use Excel and homemade spreadsheets and the tax accountant will go “uhuh. Let’s group all these things together.” I have so many redundant methods and variables. It works, but it’s dog ugly and in the long term it makes things run slow. For this practice, I am fine, and I’m getting better, and sure I would like to pivot my career more into this because it excites me, but that is not a why. I believe my tools can almost serve the purposes of this project, and so I must start to actually focus on giving this purpose. As a forewarning, I expect these posts to get less about what I’m learning and more existential. I have to push myself to step out of my safe zone here and actually have an artistic position. It’s now the time I need to start considering talent for the pieces. I need to kill the self and build a platform to serve the subject as truthfully as possible. Curation will be the artistry here, not storytelling, not accounting, not programming.

For about three years I was a professional actor. I went through theatre school. I feel like the acting community at large kept me socially at arm’s length because I rejected common shared values such as sentimentality and mystical thinking. And it’s true, I dislike actors. But I respect them. ‘Actors are like ducks’, a director once told me, ‘They are gliding beautifully on the surface, but under the water, their feet are furiously kicking to get anywhere.’

[INTERSECTING POST-DRAMATIC THEATRE AND VIRTUAL REALITY]

I have a theatre background, but I’m also an animator. I’m investigating if algorithms and humans can make beauty.

Formally, I plan to devise a best practice for digitally recording subjects in isolation and to create collaborative performance workflows.

This project, originally ‘Virtual Isolation’, is supported by the Victorian Government through Creative Victoria.

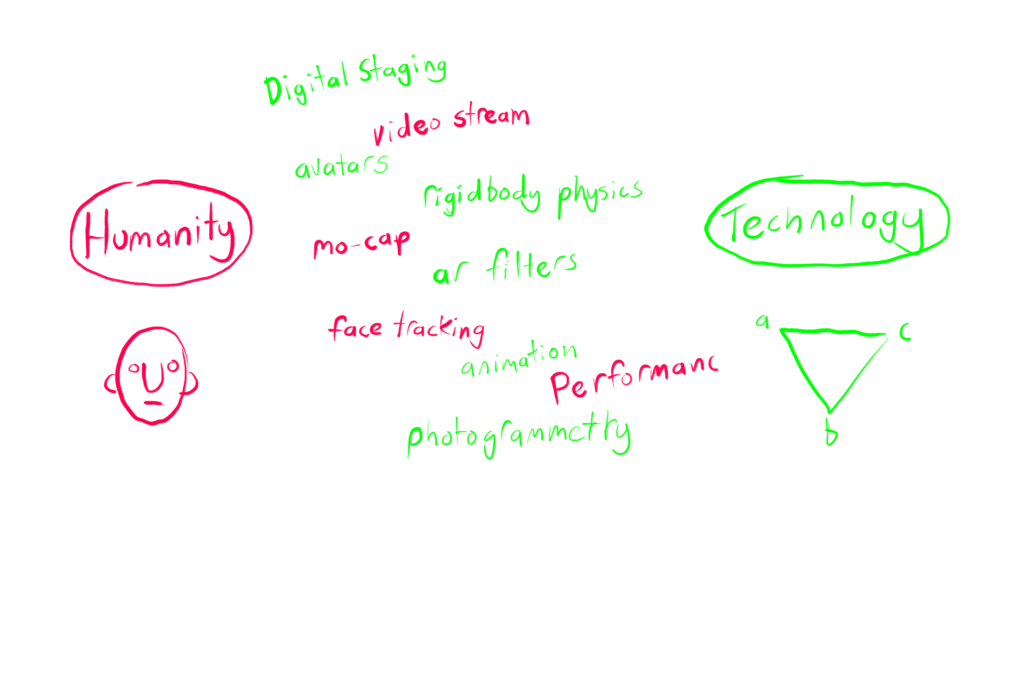

strategy.cs

I need to focus on getting performance captured at an acceptable accuracy and the performance abstracted at a level that the limits of each will meet in a pleasing manner. There are a few things to unpack here. On the left is Humanity: the subject, the performer, the verbatim performance. On the right is Technology: the distribution medium, traditional live theatre at least, full animation at most. The other part of this is the technology bolstering or adding to the creative process. I can confidently say that in this situation digital staging can be implemented as a foundation that will not distract from human performance. It fills the practical gap in distributing the work, which is why I’m allowing it to encroach on the left. To what degree the subjects are digitally abstracted on the digital stage is another dilemma. What I mean by this is that the subjects could simply appear as video planes in a virtual environment, or be augmented in a small way to give fake depth.

Realised practically, a footage plate of a recorded head could be a 2D sprite on a 3D body armature. I was even thinking perhaps of a ‘moon suit’ head bowl with digital refraction and reflection to disguise the simple footage plate. Another concept that I’ve executed previously is wrapping footage plates onto a 3D mesh to give the appearance of a head in 3D space, building the cheeks and nose with topology. I realise I am not reinventing the wheel here, but I am combining a number of different methods to act as surrogates that fill in the limitations of each. I intend to layer performance.